Building an AI chatbot to handle Amazon’s Help & Customer Service

Build a chatbot using vector search, OpenAI and langchainrb

Amazon reported net sales of $574.8 billion for 2023. Given the scale of its operations and the complexity of its services, it is reasonable to assume that customer support is a significant expense, plausibly running well into the millions of dollars.

Objective

Our objective in this post is to create a chatbot that is an expert in Amazon’s Help & Customer Service. The chatbot should be able to answer questions using the content of Amazon’s customer service pages. Hopefully, this app will help Amazon save a couple of million dollars in customer support spending 😅.

In this post, we will build only the back end of the chatbot. The UI and other improvements will come later.

Tech Stack

PostgreSQL - We’ll use PostgreSQL with a vector search extension. Why use PostgreSQL? PostgreSQL is mature and well-established, which means it has a wide range of features, strong community support, and extensive documentation.

pgvector - What is pgvector? pgvector is a PostgreSQL extension for vector similarity search.
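To make "vector similarity search" concrete, here is a minimal pure-Ruby sketch of ranking rows by cosine distance, which is the measure pgvector exposes through its `<=>` operator. The three-dimensional vectors and the `nearest` helper are made up for illustration; in the real app the database does this work.

```ruby
# Cosine distance between two equal-length numeric vectors.
# 0.0 means same direction; larger values mean less similar.
def cosine_distance(a, b)
  raise ArgumentError, "vectors must have the same length" unless a.length == b.length

  dot = a.zip(b).sum { |x, y| x * y }
  norm_a = Math.sqrt(a.sum { |x| x * x })
  norm_b = Math.sqrt(b.sum { |x| x * x })
  raise ArgumentError, "zero vector has no direction" if norm_a.zero? || norm_b.zero?

  1.0 - dot / (norm_a * norm_b)
end

# Hypothetical embeddings; real ones have hundreds or thousands of dimensions.
ROWS = {
  "Returns and refunds" => [0.9, 0.1, 0.0],
  "Shipping rates"      => [0.1, 0.9, 0.1],
  "Gift cards"          => [0.0, 0.2, 0.9]
}.freeze

# Return the titles of the `limit` rows closest to the query vector.
def nearest(query, rows, limit: 1)
  rows.min_by(limit) { |_title, vector| cosine_distance(query, vector) }.map(&:first)
end

p nearest([1.0, 0.0, 0.0], ROWS, limit: 2)
```

A vector index in PostgreSQL serves the same purpose as `nearest`, but avoids scanning every row.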

langchainrb_rails - The Rails integration for langchainrb. Think of it as what Searchkick is to Elasticsearch, but for AI.

langchainrb - Think of it as a framework for integrating AI with Ruby applications.

neighbor - This gem handles nearest-neighbor vector search in PostgreSQL from Rails.

OpenAI - Provider of the large language model we will use (GPT-4).

Workflow

This is how we’ll generate embeddings for the Policy model.

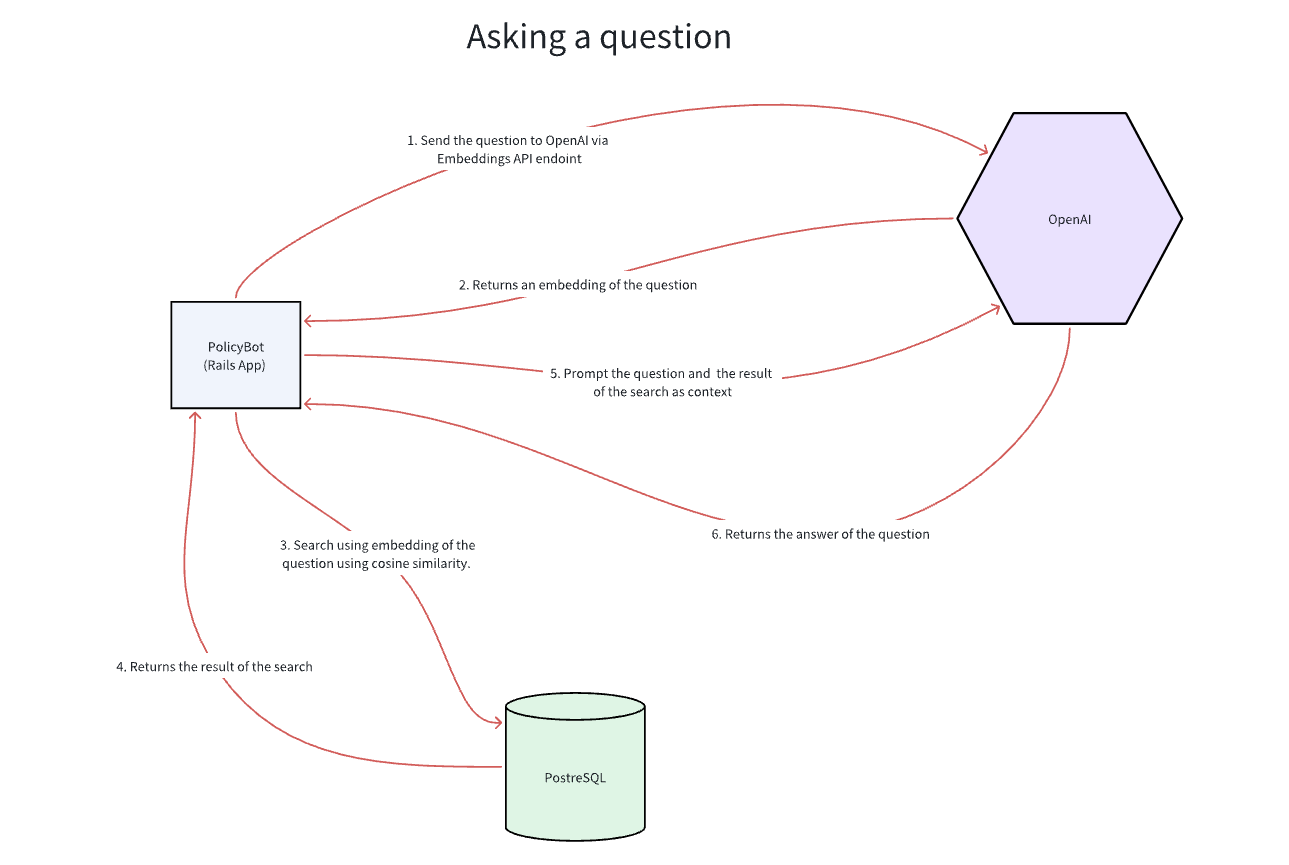

This is how a question flows through the chatbot.
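The two workflows can be sketched in plain Ruby without any external service. This is only an illustration under stated assumptions: `toy_embed` is a made-up word-counting stand-in for a real embedding model, the records are placeholders rather than real Amazon content, and the final prompt is what would be handed to the LLM.

```ruby
# Toy stand-in for an embedding model: counts words that start with known terms.
# Real embeddings come from the LLM provider; this vocabulary is hypothetical.
VOCABULARY = %w[return refund shipping delivery gift card].freeze

def toy_embed(text)
  words = text.downcase.scan(/[a-z]+/)
  VOCABULARY.map { |term| words.count { |word| word.start_with?(term) }.to_f }
end

def squared_distance(a, b)
  a.zip(b).sum { |x, y| (x - y)**2 }
end

# Placeholder records, not real Amazon content.
POLICIES = [
  { title: "Returns",  body: "Placeholder text about returns and refund timing." },
  { title: "Shipping", body: "Placeholder text about shipping and delivery options." },
  { title: "Gifts",    body: "Placeholder text about gift card balances." }
].freeze

# Workflow 1: store each policy together with its embedding.
def index_policies(policies, embed:)
  policies.map { |policy| policy.merge(embedding: embed.call(policy[:body])) }
end

# Workflow 2: embed the question, pick the k closest policies, build the prompt.
def build_prompt(question, index, embed:, k: 1)
  query = embed.call(question)
  closest = index.min_by(k) { |row| squared_distance(query, row[:embedding]) }
  context = closest.map { |row| row[:body] }.join("\n")
  "Context:\n#{context}\n\nQuestion: #{question}\nAnswer:"
end

index = index_policies(POLICIES, embed: method(:toy_embed))
puts build_prompt("How do I get a refund?", index, embed: method(:toy_embed))
```

Only the closest policies are included in the prompt, which keeps the request small and gives the model relevant text to answer from.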

Implementation

Step 1

Let’s create a new Rails app.

Step 2

Let’s create a Policy model. This is where we store the content of Amazon’s Help & Customer Service pages.

Step 3

Next, we update seeds.rb to load Amazon’s policy pages into the Policy model. The seeds.rb should look like this:

Step 4

The next step is to install langchainrb_rails.

Step 5

After installing langchainrb_rails, the next step is to run the Rails generator to add vector search to the Policy model.
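As a rough guide to what this step produces, the langchainrb_rails README describes a pgvector generator that, among other things, adds two lines to the model. The fragment below is a sketch based on that README, not the exact output for this app; the generator invocation in the comment is an assumption about the command used here.

```ruby
# app/models/policy.rb
# Assumed to be generated by something like:
#   rails generate langchainrb_rails:pgvector --model=Policy --llm=openai
class Policy < ApplicationRecord
  # Enables the vector search helpers on this model.
  vectorsearch

  # Refreshes the stored embedding whenever a record is saved.
  after_save :upsert_to_vectorsearch
end
```

The generator is also documented to create a migration for the embedding column and an initializer, so it is worth reviewing the generated files before running migrations.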

Step 6

The next step is to generate embeddings for the Policy records. In the console, do this:

Step 7

After generating the embeddings, the next step is to set up a connection to OpenAI's GPT-4 model using langchainrb, configure vector search, and then use this setup to answer a question.
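A minimal sketch of such a setup, following the initializer shown in the langchainrb_rails README, might look like the fragment below. It assumes the API key lives in an `OPENAI_API_KEY` environment variable. Selecting GPT-4 specifically is left out because the option name for the model differs between langchainrb versions, so check the documentation for the version you have installed.

```ruby
# config/initializers/langchainrb_rails.rb
# Assumption: the OpenAI key is provided through the environment.
LangchainrbRails.configure do |config|
  config.vectorsearch = Langchain::Vectorsearch::Pgvector.new(
    llm: Langchain::LLM::OpenAI.new(api_key: ENV["OPENAI_API_KEY"])
  )
end

# With this in place, a model that has vectorsearch enabled is documented
# to respond to `ask`, for example in the Rails console:
#   Policy.ask("How do I return an item?")
```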

Step 8

Now, it's time to test the chatbot in the terminal.
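For repeated questions, a small read-and-answer loop is handy. The sketch below is a hypothetical helper, not part of langchainrb_rails: it reads questions line by line and prints whatever the given block returns, so it can wrap any object that answers questions.

```ruby
# Reads questions line by line and prints whatever the given block returns.
# Type "exit" (or send EOF with Ctrl-D) to stop.
def chat_loop(input: $stdin, output: $stdout)
  loop do
    output.print "You: "
    line = input.gets
    break if line.nil?

    question = line.strip
    break if question == "exit"
    next if question.empty?

    output.puts "Bot: #{yield(question)}"
  end
end

# In a Rails console, assuming the Policy model responds to `ask`
# (as langchainrb_rails documents for models with vectorsearch enabled):
#   chat_loop { |question| Policy.ask(question) }
```

Depending on the library version, `ask` may return a response object rather than a plain string, in which case the block should extract the text before returning it.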

Ademar Tutor

hey@ademartutor.com